Projects

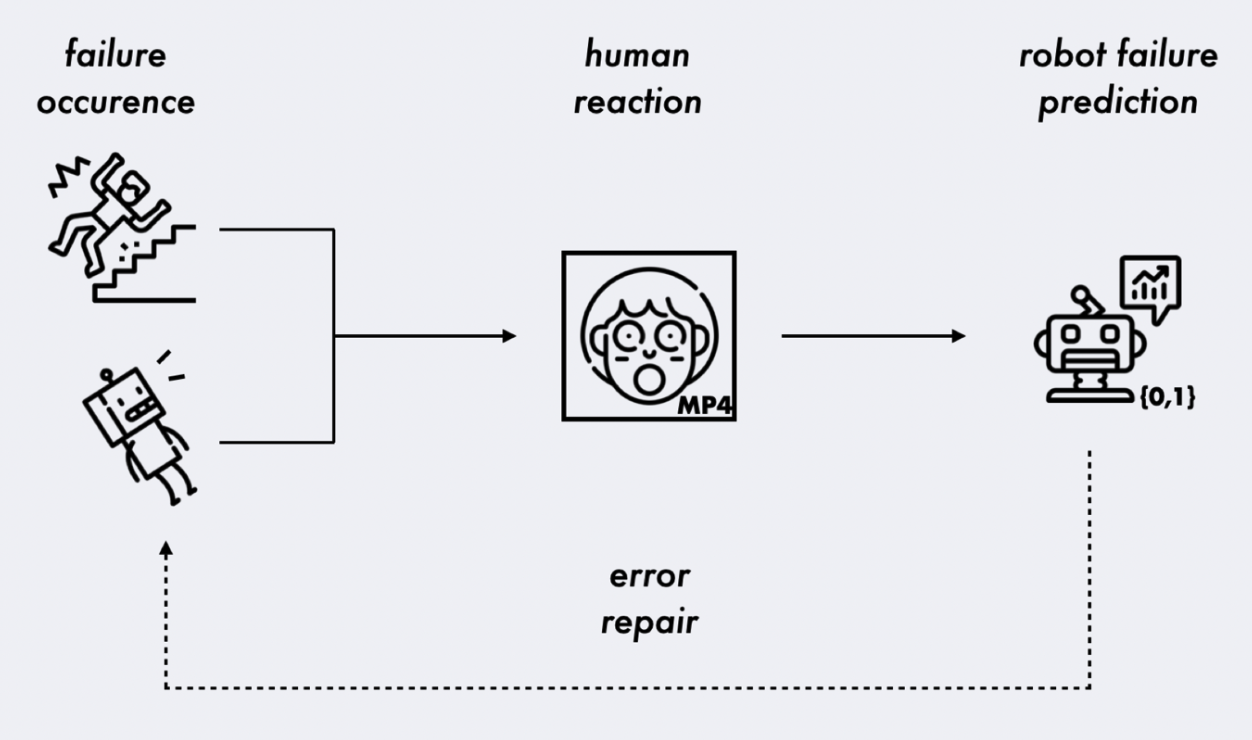

The Bystander Affect Detection (BAD) Dataset for Failure Detection in HRI

We introduce the Bystander Affect Detection (BAD) dataset, a collection of videos of bystanders reacting to videos of failures. The dataset includes 2,452 human reactions to failure, collected under conditions that approximate in-the-wild data collection, including natural variation in webcam quality, lighting, and background. This project is part of a collaborative research effort between Cornell Tech (PI: Associate Professor Wendy Ju) and Accenture Labs.

Read our BAD Dataset paper here: https://ieeexplore.ieee.org/document/10342442. Read our broader literature review and framework on using social cues to detect task failures here: https://arxiv.org/abs/2301.11972.

The video dataset is available on request for related research projects. Because it contains facial video of our participants, access requests must include a research protocol or data use agreement that protects participants. To request access to the BAD dataset, send a message through the Qualitative Data Repository (best accessed with Google Chrome): https://data.qdr.syr.edu/dataset.xhtml?persistentId=doi:10.5064/F6TAWBGS.

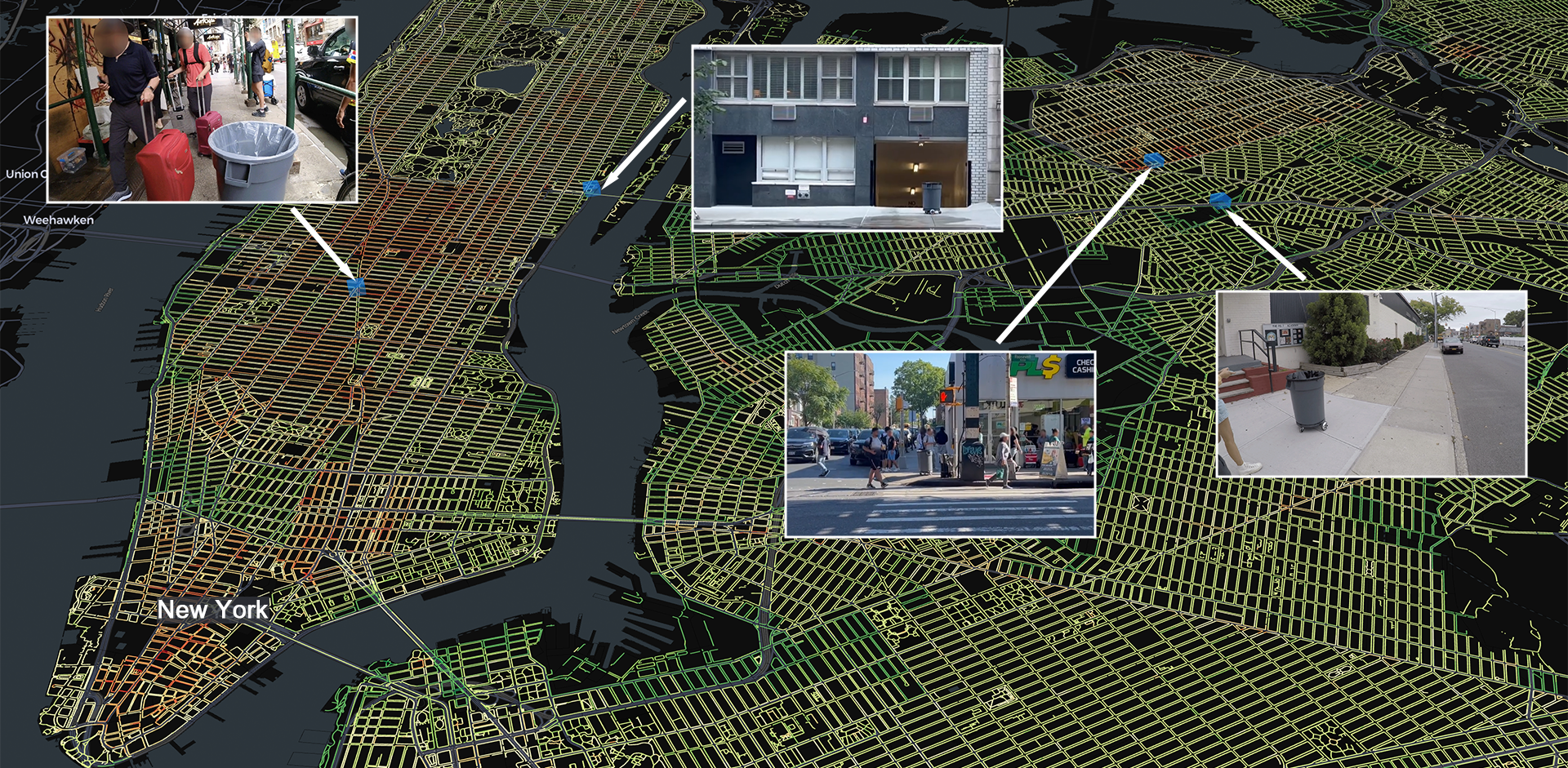

The Robotability Score

The Robotability Score (R) is a novel metric that quantifies how suitable an urban environment is for autonomous robot navigation. Drawing on expert interviews and surveys, we developed a standardized framework for evaluating urban landscapes that aims to reduce uncertainty in robot deployment while respecting established mobility patterns. Streets with high Robotability are both more navigable for robots and less disruptive to pedestrians. We built a proof-of-concept Robotability Score for New York City from a wide range of open datasets on NYC OpenData, together with pedestrian distributions inferred from a dataset of 8 million dashcam images taken around the city in late 2023.